Organizations are creating, analyzing, and storing more data than ever before. Those that can deliver insights faster while managing rapidly growing infrastructure are the ones that lead their industries. To deliver those insights, an enterprise's storage foundation must support both new-era big data workloads and traditional applications while providing strong security, reliability, and high performance. As a high-performance solution for managing data at scale, IBM Storage Scale (formerly IBM Spectrum Scale) provides unique archiving and analytics capabilities to help you address these challenges.

Experiment: Migration Policy Demonstration

Experiment Content:

Firefox or Chrome is the recommended browser.

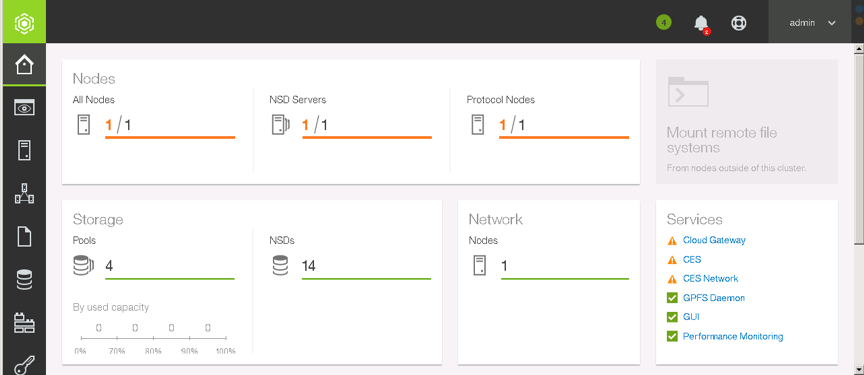

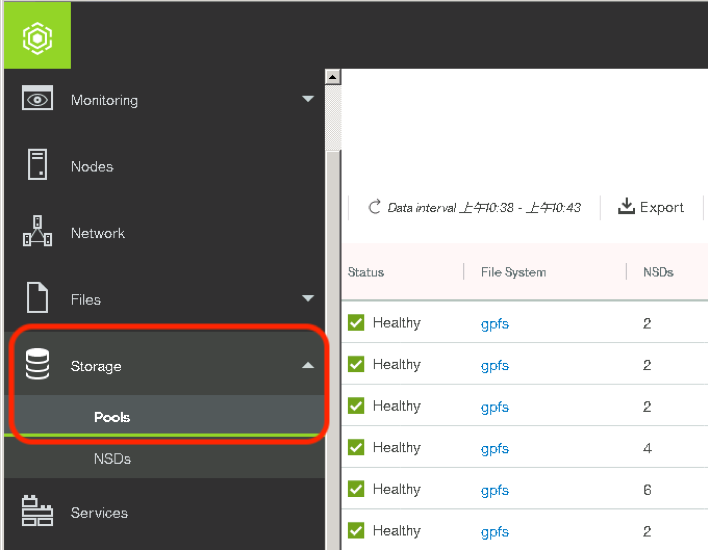

This experiment introduces the basic concepts and operations of migration policies in the IBM Storage Scale (GPFS) parallel file system.

Challenges: Intelligent Allocation of Storage Space

Challenge Background:

At a small Internet company, high-performance storage is a scarce resource. Whenever the high-performance storage pool fills past a certain threshold, data has to be migrated by hand. Help the company automate the migration and allocation of space across its storage pools by using the migration rules of Storage Scale.

Challenge Goal:

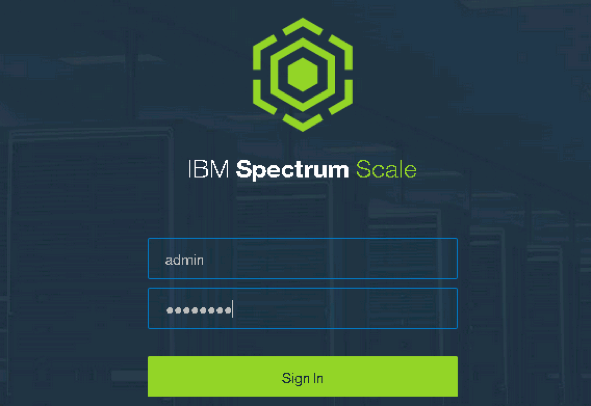

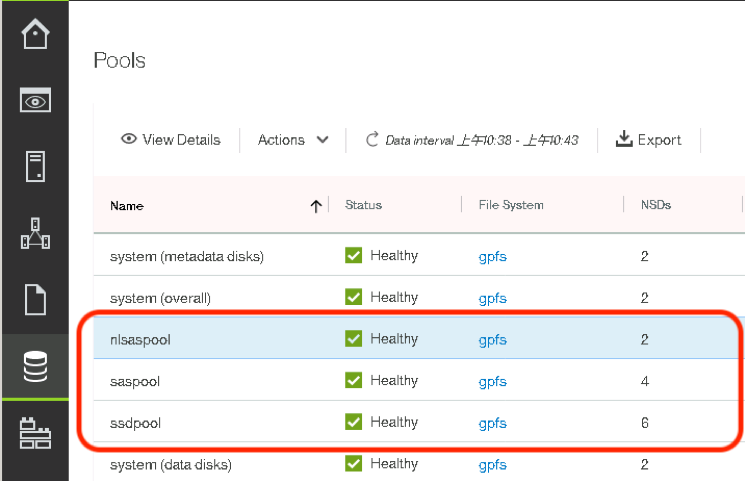

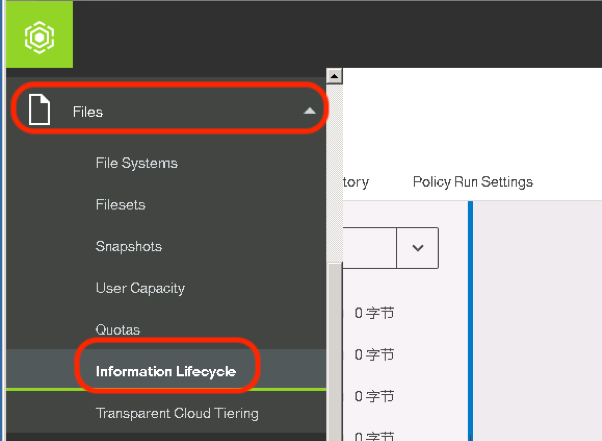

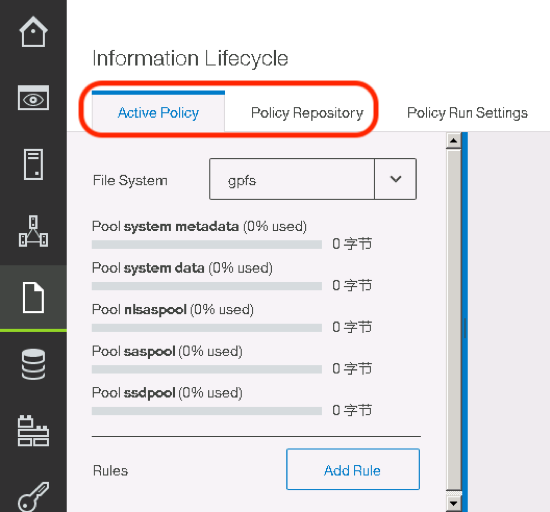

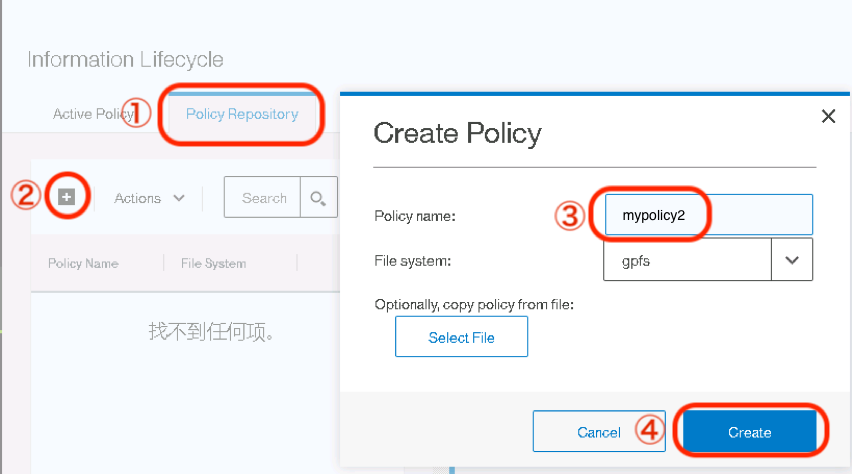

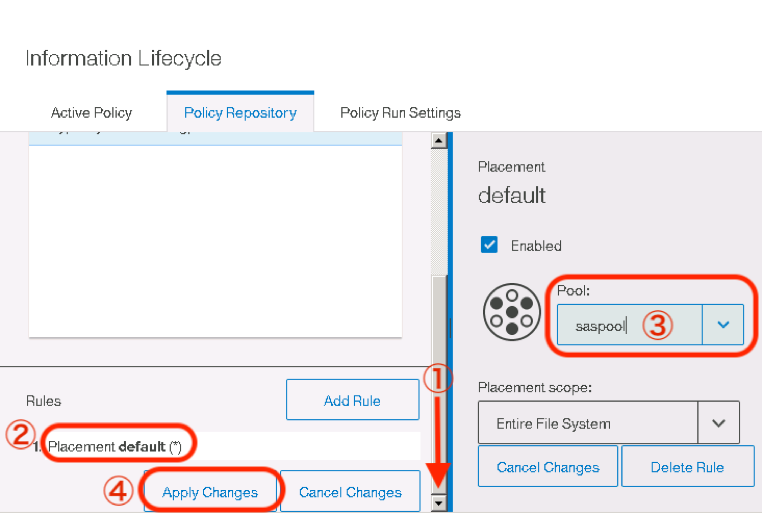

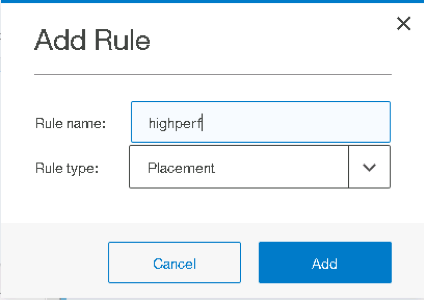

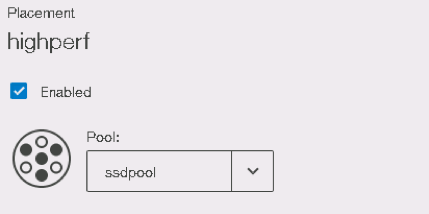

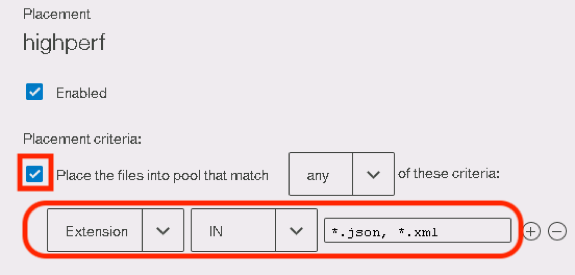

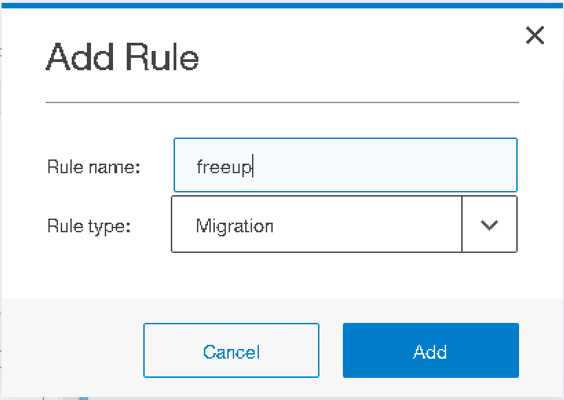

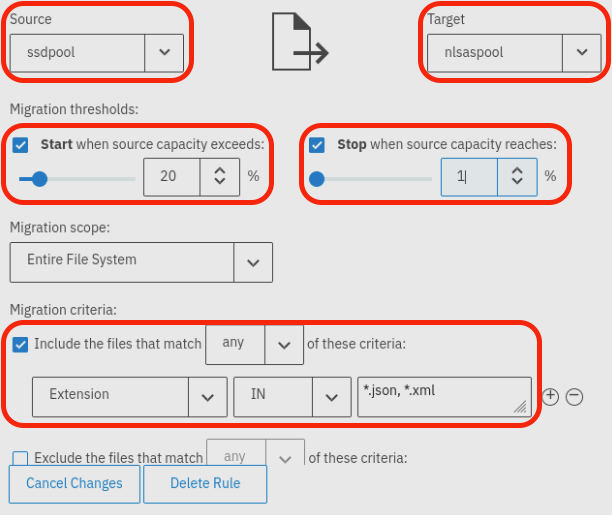

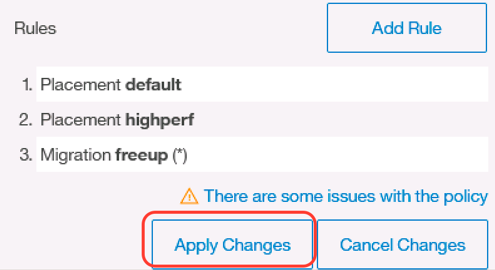

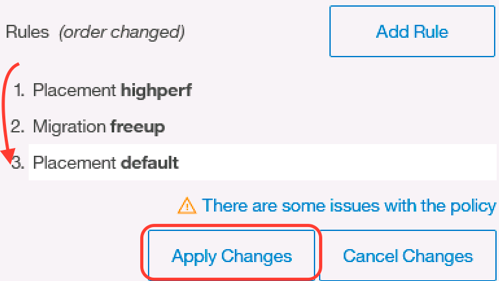

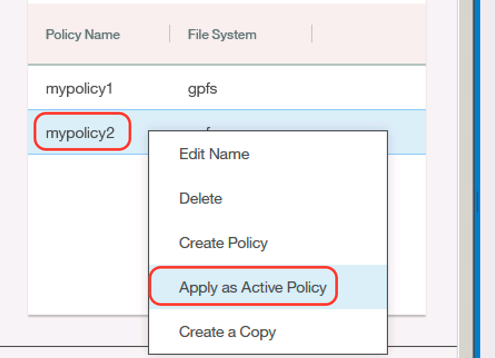

Log in as admin/admin001 to the graphical management interface of Storage Scale and configure a migration rule with the following behavior: when the space usage of the storage pool "ssdpool" exceeds 20%, files in JSON and XML format are migrated to the storage pool "nlsaspool", freeing space in ssdpool until 99% of it is available. Then, in the directory /gpfs, run the command "dd if=/dev/zero of=test.json bs=1M count=1000" to simulate writing a file and trigger the migration.
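The graphical interface builds the rule for you, but the same intent can also be written in the Storage Scale policy language. The following is a minimal sketch, not the lab's reference solution. The rule name `migrate_json_xml` and the file path are hypothetical. The low-water mark of 1% is one reading of "99% available space". Matching on file-name extension is an assumption about what "json and xml formats" means here.

```shell
#!/bin/sh
# Sketch only: the rule name, the file path, and the 1% low-water mark are
# assumptions made for illustration, not values taken from the lab manual.
POLICY_FILE="${POLICY_FILE:-/tmp/migrate_json_xml.pol}"

# Write the policy rule to a file. The quoted 'EOF' keeps the shell from
# expanding anything inside the rule text.
cat > "$POLICY_FILE" <<'EOF'
/* Start migrating when ssdpool is more than 20% full; stop at 1% full. */
RULE 'migrate_json_xml'
  MIGRATE FROM POOL 'ssdpool'
  THRESHOLD(20,1)
  TO POOL 'nlsaspool'
  WHERE LOWER(NAME) LIKE '%.json' OR LOWER(NAME) LIKE '%.xml'
EOF

echo "Policy written to $POLICY_FILE"

# On a cluster node, a dry run that only reports which files would be
# selected (nothing is moved) might look like this:
#   mmapplypolicy /gpfs -P "$POLICY_FILE" -I test
```

`THRESHOLD(20,1)` takes the high and low occupancy percentages of the source pool, so migration starts above 20% used and continues until usage drops to 1%. The `mmapplypolicy` line is left commented out because it only works on a node of the Storage Scale cluster.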

Challenge Rules:

1. Once the challenge starts, a 30-minute timer begins.

2. After completing the challenge task, click the "Submit Results" button in the upper-left corner.

3. The system automatically evaluates your work and reports your result and score.

4. Rankings are based on completion time: the shorter your time, the higher your rank.

Challenge Ranking List

| Rank | Nickname | Time |
| --- | --- | --- |
| 1 | mengweihangzhou | 5s |
| 2 | 80468165 | 2:1s |
| 3 | caizc | 2:47s |
| 4 | 柠檬糖ㄨ梦 | 2:50s |
| 5 | lg_13606 | 3:54s |

Discovery: Migration Policy Demonstration

Experiment Content:

This experiment introduces the basic concepts and operations of migration policies in the IBM Storage Scale (GPFS) parallel file system.

Experiment Resources:

- IBM Storage Scale 5.0.1 software

- Red Hat Enterprise Linux 7.4 (VM)

Tips

1. Discovery gives you more time, so you can explore freely at your own pace.

2. All data is cleared when the discovery session ends.

3. You must finish the experiment and the challenge before you can start discovery.